Facial recognition is now used in many walks of modern life, in applications such as security and defense, retail and marketing, healthcare, and hospitality. Security and defense account for a far greater share of its use than any of the other applications.

The roots of facial recognition go back to the 1800s. Soon after the camera was invented, law enforcement began using it to photograph criminals so that repeat offenders could be identified.

Despite such critical applications, San Francisco banned the use of facial recognition by city agencies in May 2019, becoming the first US city to impose such a ban. Recently, Boston joined the list by voting to ban facial recognition as well.

In one case, five watches worth $3,800 were stolen from a Shinola retail store. As part of the investigation, police collected the CCTV footage and ran facial recognition software on the person seen in it. The software identified him as Robert Julian-Borchak Williams, 42, of Farmington Hills, Michigan. The charges against Williams were eventually dropped because the software had produced a false match.

Against this backdrop, the Boston City Council voted to ban the use of such software. The measure now goes to Boston Mayor Marty Walsh. One of the bill's sponsors, Councilor Ricardo Arroyo, noted that the facial recognition software used by the police is inaccurate for people of color.

Beyond this real-world example of a facial recognition error, an MIT study examined the accuracy of such software. It found an error rate of 0.8% for light-skinned men, compared with 34.7% for dark-skinned women.

|

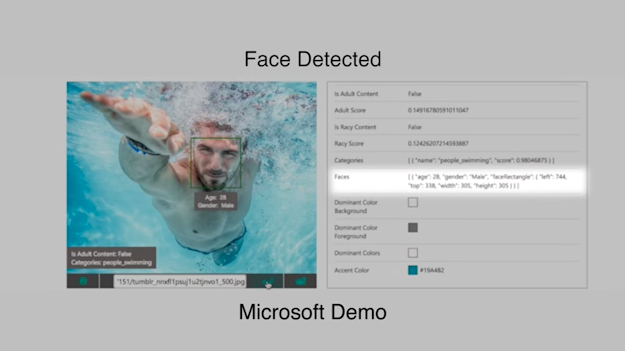

| Light-skinned man identified by Facial recognition software |

|

| Dark-skinned woman identified by Facial recognition software |

> It has an obvious racial bias and that's dangerous. But it also has sort of a chilling effect on civil liberties. And so, in a time where we're seeing so much direct action in the form of marches and protests for rights, any kind of surveillance technology that could be used to essentially chill free speech or ... more or less monitor activism or activists is dangerous.

Boston Police Commissioner William Gross said that the technology is not yet reliable. In his words, "Until this technology is 100%, I'm not interested in it. I didn't forget that I'm African American and I can be misidentified as well."

In light of the recent movement against racism, Williams's case is deeply alarming. Simply because of a software error, he was accused and had to appear in court. In an era of breaking down inequalities, the software we build, the machine learning algorithms it implements, and the ways those algorithms are applied should all be designed with much greater care.
