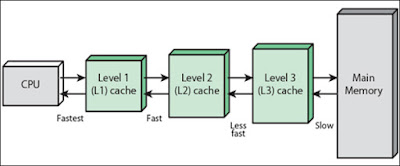

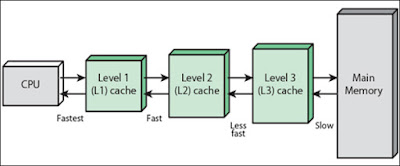

A cache is a small, fast on-chip memory that holds frequently used data so the processor can avoid slower accesses to off-chip main memory. Modern chips have three or four levels of cache. However, as demand grows for better performance and faster memory access, even these cache hierarchies are falling short.

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have designed a new cache system that reallocates cache resources on the fly, building a cache hierarchy tailored to the needs of each program.

"What you would like is to take these distributed physical memory resources and build application-specific hierarchies that maximize the performance for your particular application. And that depends on many things in the application. What’s the size of the data it accesses? Does it have hierarchical reuse, so that it would benefit from a hierarchy of progressively larger memories? Or is it scanning through a data structure, so we’d be better off having a single but very large level? How often does it access data? How much would its performance suffer if we just let data drop to main memory? There are all these different trade-offs," says Daniel Sanchez, an assistant professor in the Department of Electrical Engineering and Computer Science (EECS).
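The trade-off Sanchez describes can be made concrete with the textbook average memory access time (AMAT) formula. The sketch below is only an illustration under assumed numbers: the function names, hit rates, and latencies are hypothetical and are not taken from the Jenga work. It compares a single large cache level with a two-level hierarchy for a reuse-heavy workload and for a scanning workload.

```python
def amat_single_level(hit_rate, cache_latency, memory_latency):
    """Average access time with one large cache level in front of main memory."""
    return cache_latency + (1.0 - hit_rate) * memory_latency


def amat_two_level(near_hit_rate, near_latency, far_hit_rate, far_latency,
                   memory_latency):
    """Average access time with a small near level backed by a larger far level.

    far_hit_rate is a local hit rate: the fraction of near-level misses
    that hit in the far level.
    """
    near_miss_penalty = far_latency + (1.0 - far_hit_rate) * memory_latency
    return near_latency + (1.0 - near_hit_rate) * near_miss_penalty


# Hypothetical latencies in cycles (assumptions, not measured values).
MEMORY = 200

# Reuse-heavy workload: most accesses hit in the small near level.
reuse_two_level = amat_two_level(0.80, 10, 0.75, 40, MEMORY)
reuse_single = amat_single_level(0.95, 30, MEMORY)

# Scanning workload: the small near level almost never hits.
scan_two_level = amat_two_level(0.05, 10, 0.90, 40, MEMORY)
scan_single = amat_single_level(0.90, 30, MEMORY)

print(f"reuse: two-level {reuse_two_level:.1f}, single {reuse_single:.1f}")
print(f"scan:  two-level {scan_two_level:.1f}, single {scan_single:.1f}")
```

With these assumed numbers, the hierarchy is faster for the reuse-heavy workload, while the single large level is faster for the scanning workload, which is the kind of per-application difference the quote points to.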

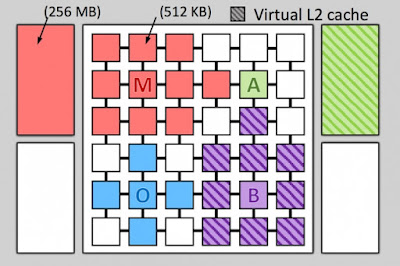

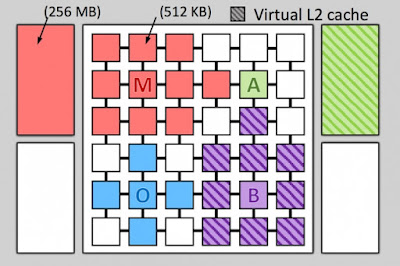

Sanchez and his co-authors from MIT recently presented a new cache system called Jenga at the International Symposium on Computer Architecture. Quad-core processors have become the most common in desktop PCs, whereas servers use processors with 16 cores. Given the growing demand for computing power, the number of cores per processor is likely to increase rapidly.

Figure: Levels of cache memory

In multi-core chips, each core typically has two levels of private cache, while another cache is shared by all the cores. Some recent chips also include a DRAM cache. In such designs, the physical distance between a core and the cache bank it uses matters. Jenga distinguishes between the physical locations of the individual memory banks that make up the shared cache. For each core, it estimates the time required to fetch data from each on-chip memory bank, a measure known as latency.
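To illustrate why bank location matters, here is a minimal sketch of a per-core latency estimate on a tiled chip. It assumes a 2D mesh where each tile holds one core and one cache bank, and where latency grows with the number of hops between tiles. The function names, the hop cost, and the bank access cost are hypothetical values chosen for illustration, not Jenga's actual model.

```python
def bank_latency(core, bank, hop_cycles=2, bank_access_cycles=9):
    """Estimate the cycles for `core` to read from `bank` on a 2D mesh.

    `core` and `bank` are (row, col) tile coordinates. The request and the
    reply each cross the same number of hops, hence the factor of 2.
    """
    hops = abs(core[0] - bank[0]) + abs(core[1] - bank[1])
    return bank_access_cycles + 2 * hops * hop_cycles


def nearest_banks(core, banks, count):
    """Return the `count` banks with the lowest estimated latency from `core`."""
    return sorted(banks, key=lambda bank: bank_latency(core, bank))[:count]


# A 6x6 mesh gives 36 tiles, listed in row-major order.
banks = [(row, col) for row in range(6) for col in range(6)]
corner_core = (0, 0)

local_latency = bank_latency(corner_core, (0, 0))
far_latency = bank_latency(corner_core, (5, 5))
closest = nearest_banks(corner_core, banks, 3)

print(local_latency, far_latency, closest)
```

Under these assumptions, the bank on the core's own tile is several times faster to reach than the bank in the opposite corner, so a placement policy has good reason to prefer nearby banks.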

Jenga builds on an earlier cache system from MIT called Jigsaw. Unlike Jigsaw, Jenga also builds cache hierarchies, which makes the allocation problem more complex. By adopting computational shortcuts, Jenga updates its memory allocation every 100 ms to keep up with changes in programs and their memory access patterns. In simulations of a 36-core chip with the Jenga cache hierarchy, performance improved by up to 30% and energy consumption fell by up to 85%.

Figure: 36-tile Jenga system running four applications
