If you’re reading this article, you can thank optical communications technology: it is part of our everyday internet access. Over the past few years, scientists have made progress in transmitting both data and power to various devices from a distance.

Murat Uysal and his team from Ozyegin University, Turkey, have published a paper titled “SLIPT for Underwater Visible Light Communications: Performance Analysis and Optimization.”

The paper provides a detailed analysis of a new algorithm for transmitting both data and power to underwater devices using light, with the highest efficiency achieved to date.


Over the centuries, humans have explored relatively little of the underwater world. In the past few years, scientists have been deploying underwater sensors to gather data and study these environments. Signals are currently transmitted underwater using sound waves. Although sound waves can travel long distances through the watery depths, they carry far less data than light waves.

Visible light communication can provide data rates at several orders of magnitude beyond the capabilities of traditional acoustic techniques and is particularly suited for emerging bandwidth-hungry underwater applications.

Murat Uysal, a professor with the Department of Electrical and Electronics Engineering at Ozyegin University, in Turkey.

Because replacing batteries is so difficult, sensors and other electronic devices are hard to manage and maintain in such environments. Devices that can run on solar panels have an added advantage: the same light signals can carry data while the panel harvests energy from them. In such a setup, an autonomous vehicle can be deployed to transmit data to, and receive data from, the sensor.

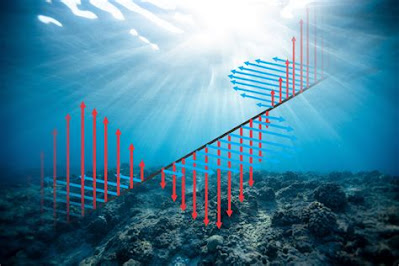

The power derived from the light signals received by the sensor can be split into Alternating Current (AC) and Direct Current (DC) components: the AC component carries the data, and the DC component acts as a power source. Murat Uysal calls this process the AC-DC Separation (ADS) method.

This is the gist of the paper that he published.
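To make the AC-DC idea concrete, here is a minimal sketch, not the method from the paper. It assumes on-off keyed (OOK) data riding on a constant bias current, uses the block average as an idealised low-pass filter to estimate the DC part, and treats whatever remains as the AC data signal. The function names, the 2 mA bias, the 0.5 mA swing and the 0.5 V operating voltage are all hypothetical, chosen only for illustration.

```python
from statistics import fmean


def ads_split(photocurrent):
    """Hypothetical AC-DC separation of a block of photocurrent samples (amperes).

    The DC estimate is the block average (an idealised low-pass filter);
    the AC component is the residual left after removing it.
    """
    dc = fmean(photocurrent)
    ac = [sample - dc for sample in photocurrent]
    return dc, ac


def demodulate_ook(ac):
    """Recover on-off keyed bits from the sign of the AC component."""
    return [1 if x > 0 else 0 for x in ac]


def harvested_power(dc, operating_voltage):
    """Rough DC power available to the harvesting branch, P = V * I (watts).

    operating_voltage is an assumed solar-cell operating point, not a measured value.
    """
    return dc * operating_voltage


# Illustrative numbers only: 2 mA bias with a +/-0.5 mA on-off keying swing.
bits = [1, 0, 1, 1, 0, 0, 1, 0]
bias, swing = 2e-3, 0.5e-3
received = [bias + (swing if b else -swing) for b in bits]

dc, ac = ads_split(received)
print("recovered bits:", demodulate_ook(ac))
print(f"DC current: {dc * 1e3:.2f} mA, "
      f"harvested power: {harvested_power(dc, 0.5) * 1e3:.2f} mW")
```

Both branches work on the same received signal at the same time, which is why this kind of separation does not have to give up data time to collect energy. A real receiver would use analog filtering and a proper detector rather than a block average and a sign test.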


The team has also proposed another method that strategically switches between energy harvesting and data transfer, and optimizes performance by tuning how the time is divided between the two. This process is called Simultaneous Light Information and Power Transfer (SLIPT). However, the SLIPT method has not surpassed the ADS method in terms of performance.
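The switching trade-off described above can be sketched with a deliberately simple model, which is an assumption and not the optimization used in the paper. Each frame of length T is split: a fraction alpha is spent harvesting at an assumed power P_h, and the remaining 1 - alpha carries data at an assumed rate R. If the sensor needs E_req joules per frame, the harvested energy alpha * T * P_h must be at least E_req. Because delivered data shrinks as alpha grows, the best choice in this toy model is the smallest feasible alpha. All names and numbers below are hypothetical.

```python
def min_harvest_fraction(e_required, frame_s, p_harvest):
    """Smallest fraction of a frame spent harvesting that still collects e_required joules.

    Assumes harvested power p_harvest (watts) is constant while in harvesting mode.
    """
    alpha = e_required / (frame_s * p_harvest)
    if alpha > 1:
        raise ValueError("energy target cannot be met within one frame")
    return alpha


def bits_per_frame(alpha, frame_s, rate_bps):
    """Data delivered in the (1 - alpha) share of the frame left for communication."""
    return (1 - alpha) * frame_s * rate_bps


# Hypothetical numbers: 1 s frame, 1 mW harvested in harvesting mode,
# 1 Mbit/s link in data mode, and a sensor that needs 0.2 mJ per frame.
frame_s, p_h, rate = 1.0, 1e-3, 1e6
alpha = min_harvest_fraction(2e-4, frame_s, p_h)
print(f"harvest {alpha:.0%} of the frame, "
      f"deliver {bits_per_frame(alpha, frame_s, rate):.0f} bits")
```

Unlike AC-DC separation, every moment spent harvesting here is a moment not spent sending data, which gives some intuition for why a switching scheme can trail a separation scheme in performance.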

The feasibility of wireless power was already successfully demonstrated in underwater environments [using light], despite the fact that seawater conductivity, temperature, pressure, water currents, and biofouling phenomenon impose additional challenges.

These optical communication methods are still experimental. According to Uysal, the SLIPT method has more commercial potential. Advances in these methods could ultimately lead to underwater modems that use visible light. Meanwhile, scientists continue their research into better ways of deploying sensors underwater, powering them remotely, and studying the oceans and underwater life.